Introduction

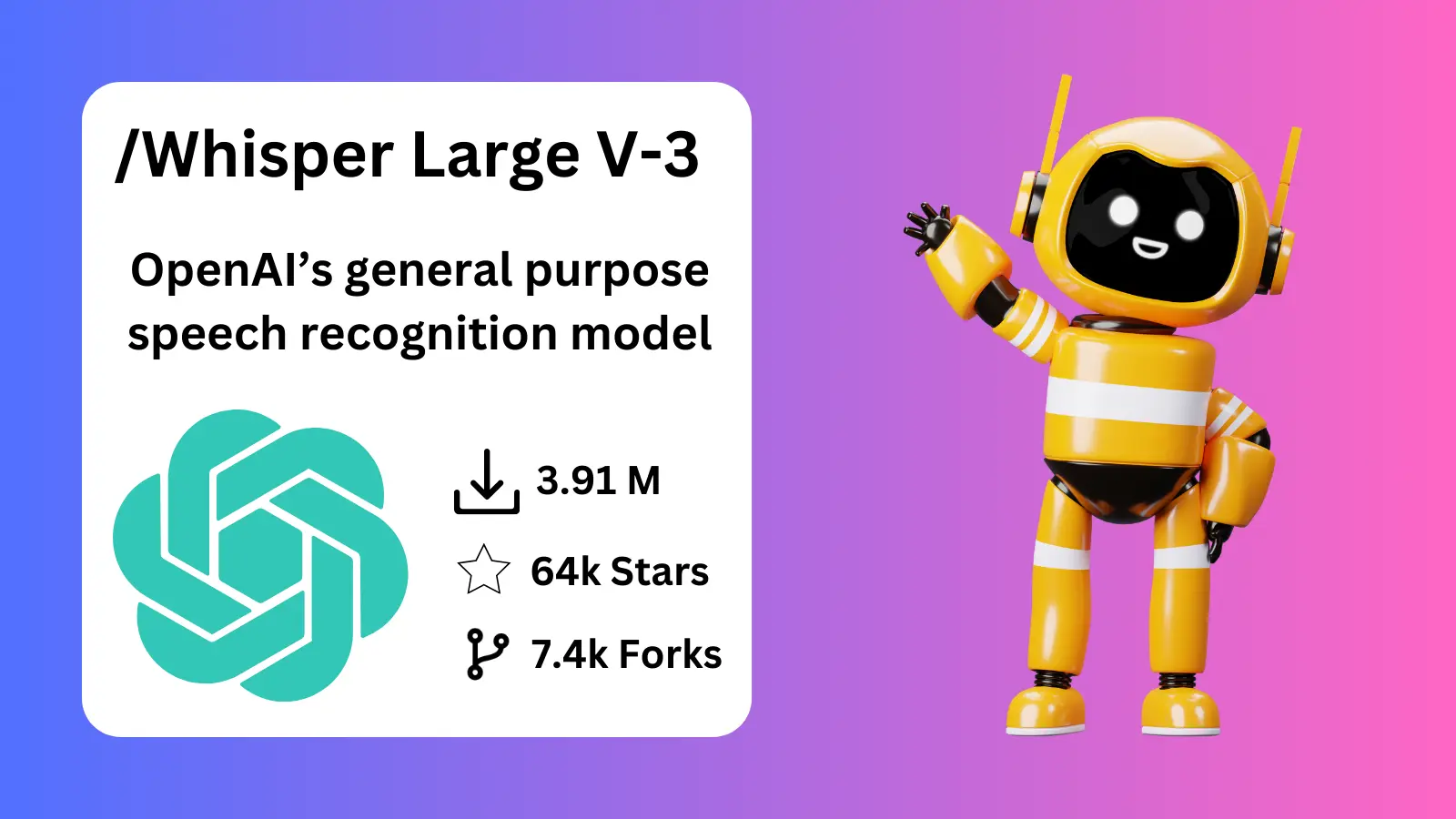

Whisper, developed by OpenAI, is a state-of-the-art automatic speech recognition (ASR) and speech translation model that performs well across a wide range of languages and domains. The original Whisper models were trained on 680k hours of labeled audio data, and they generalize to many datasets and domains without fine-tuning.

The latest iteration, Whisper large-v3, keeps the architecture of the previous large models apart from minor changes and improves on their performance. This report covers the architecture, training data, performance, and limitations of Whisper large-v3.

Model Architecture

Whisper is a Transformer-based encoder-decoder model, also known as a sequence-to-sequence model. The large-v3 model has the same architecture as the previous large models, with the exception of two minor differences:

- The input uses 128 Mel frequency bins instead of 80.

- A new language token for Cantonese has been added.
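The effect of the first change on the encoder input can be sketched with a little arithmetic. The sample rate, hop length, and 30-second window below are taken from the reference Whisper implementation rather than from this report, so treat them as assumptions. Only the number of Mel bins differs between large-v2 and large-v3.

```python
# Assumed front-end constants (from the reference Whisper implementation).
SAMPLE_RATE = 16_000   # audio samples per second
HOP_LENGTH = 160       # samples between spectrogram frames (10 ms)
CHUNK_SECONDS = 30     # fixed input window length


def encoder_input_shape(n_mels: int) -> tuple[int, int]:
    """Return (mel_bins, frames) for one 30-second log-Mel spectrogram."""
    n_frames = CHUNK_SECONDS * SAMPLE_RATE // HOP_LENGTH
    return (n_mels, n_frames)


print(encoder_input_shape(80))    # previous large models: (80, 3000)
print(encoder_input_shape(128))   # large-v3: (128, 3000)
```

The time resolution is unchanged; large-v3 only sees a finer frequency resolution per frame.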

The model was trained on a mixture of 1 million hours of weakly labeled audio and 4 million hours of pseudolabeled audio collected using Whisper large-v2. The training process spanned 2.0 epochs over this dataset.

Whisper checkpoints come in five configurations of varying model sizes: tiny, base, small, medium, and large. The large-v3 model has 1550 million parameters and is only available in a multilingual configuration, trained on both speech recognition and speech translation tasks.
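As a rough guide to what these sizes mean in practice, the sketch below estimates the memory needed just to hold the weights. Only the 1550 million figure comes from this report; the other parameter counts are taken from the public Whisper model card and are an assumption here. The estimate ignores activations and decoding caches.

```python
# Parameter counts in millions. Only "large" is stated in this report;
# the other values are assumed from the public Whisper model card.
PARAMS_MILLIONS = {
    "tiny": 39,
    "base": 74,
    "small": 244,
    "medium": 769,
    "large": 1550,
}


def weights_gb(params_millions: float, bytes_per_param: int = 2) -> float:
    """Approximate weight storage in GB (10^9 bytes); 2 bytes per parameter is float16."""
    return params_millions * 1e6 * bytes_per_param / 1e9


for name, millions in PARAMS_MILLIONS.items():
    print(f"{name:>6}: {weights_gb(millions):.2f} GB in float16")
```

By this estimate the large-v3 weights take about 3.1 GB in float16 and about twice that in float32.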

Training Data

Whisper large-v3 was trained on 1 million hours of weakly labeled audio and 4 million hours of audio pseudolabeled by Whisper large-v2. This dataset was a mixture of English-only and multilingual data.

For speech recognition, the model was trained to predict a transcription in the same language as the input audio. For speech translation, the model was trained to predict text in a different language from the input audio, which for Whisper means translation into English.
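In the released Whisper models, the choice between the two tasks is communicated to the decoder through special tokens at the start of the output sequence. The sketch below builds that prefix as a list of strings. The token spellings follow the public Whisper tokenizer, and the helper name `decoder_prompt` is hypothetical.

```python
def decoder_prompt(language_code: str, task: str = "transcribe",
                   timestamps: bool = False) -> list[str]:
    """Build the special-token prefix that conditions the decoder.

    language_code is a short code such as "en", "fr", or "yue" (Cantonese).
    """
    if task not in ("transcribe", "translate"):
        raise ValueError(f"unknown task: {task!r}")
    tokens = ["<|startoftranscript|>", f"<|{language_code}|>", f"<|{task}|>"]
    if not timestamps:
        tokens.append("<|notimestamps|>")
    return tokens


# French audio transcribed as French text.
print(decoder_prompt("fr", "transcribe"))
# French audio translated into English text.
print(decoder_prompt("fr", "translate"))
```

The language token names the language spoken in the audio in both cases; only the task token changes.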

Transcription performance in a given language was directly correlated with the amount of training data available for that language. This suggests that the model's capabilities depend heavily on how much data each language contributes to the training set.

Fine-Tuning and Evaluated Use

While the pre-trained Whisper model demonstrates strong generalization capabilities, its performance can be further improved for specific languages and tasks through fine-tuning. The blog post Fine-Tune Whisper with 🤗 Transformers provides a step-by-step guide to fine-tuning the Whisper model with as little as 5 hours of labeled data.

The primary intended users of the Whisper models are AI researchers studying robustness, generalization, capabilities, biases, and constraints of the current model. However, Whisper can also be useful as an ASR solution for developers, especially for English speech recognition.

The models are primarily trained and evaluated on ASR and on speech translation into English, and they show strong ASR results in approximately 10 languages. They may exhibit additional capabilities, particularly if fine-tuned on tasks such as voice activity detection, speaker classification, or speaker diarization, but they have not been robustly evaluated in these areas.

Performance and Limitations

Studies have shown that Whisper models exhibit improved robustness to accents, background noise, technical language, and zero-shot translation from multiple languages into English compared to many existing ASR systems. The accuracy on speech recognition and translation tasks is near state-of-the-art levels.

However, due to the weakly supervised training approach using large-scale noisy data, the model's predictions may include texts that are not actually spoken in the audio input (i.e., hallucination). This behavior is hypothesized to occur because the model combines trying to predict the next word in the audio with trying to transcribe the audio itself, given its general knowledge of language.

The models perform unevenly across languages, with lower accuracy on low-resource and/or low-discoverability languages or languages where less training data is available. Disparate performance is also observed on different accents and dialects of particular languages, which may include higher word error rates across speakers of different genders, races, ages, or other demographic criteria.
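Word error rate (WER), the metric referred to above, is the word-level edit distance between a reference transcript and the model output, divided by the number of reference words. The following is a minimal sketch that splits on whitespace and applies no text normalization; published evaluations usually normalize casing and punctuation first.

```python
def wer(reference: str, hypothesis: str) -> float:
    """Word error rate: (substitutions + deletions + insertions) / reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


# One substitution ("sat" -> "sit") and one deletion ("the") over six words.
print(wer("the cat sat on the mat", "the cat sit on mat"))
```

Comparing this value across groups of speakers or across languages is how the disparities described above are typically quantified.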

Additionally, the sequence-to-sequence architecture of the model makes it prone to generating repetitive texts, which can be mitigated to some degree by beam search and temperature scheduling but not perfectly. Further analysis on these limitations is provided in the paper accompanying the model release.
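One way temperature scheduling can catch repetition is sketched below: decode at a low temperature first, and retry at a higher one when the output compresses suspiciously well, which is a cheap signal of looping text. The temperature ladder and the 2.4 threshold mirror the defaults of the reference OpenAI implementation as far as I know, and `decode_fn` is a hypothetical callable standing in for the model. The reference implementation also checks the average log-probability, which is omitted here.

```python
import zlib
from typing import Callable, Sequence


def compression_ratio(text: str) -> float:
    """Raw size divided by zlib-compressed size; highly repetitive text scores high."""
    data = text.encode("utf-8")
    return len(data) / len(zlib.compress(data))


def decode_with_fallback(
    decode_fn: Callable[[float], str],          # hypothetical: temperature -> text
    temperatures: Sequence[float] = (0.0, 0.2, 0.4, 0.6, 0.8, 1.0),
    max_ratio: float = 2.4,                      # assumed default threshold
) -> tuple[str, float]:
    """Return the first decoding that does not look repetitive, with its temperature."""
    text = ""
    for temperature in temperatures:
        text = decode_fn(temperature)
        if compression_ratio(text) <= max_ratio:
            return text, temperature
    # Every attempt looked repetitive: return the last one rather than nothing.
    return text, temperatures[-1]


def fake_decode(temperature: float) -> str:
    """Stand-in decoder that loops at low temperatures."""
    if temperature < 0.4:
        return "la " * 200
    return "the quick brown fox jumps over the lazy dog"


print(decode_with_fallback(fake_decode))
```

The fallback only reduces repetition; as noted above, it does not eliminate it.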

Usage and Implementation

Whisper large-v3 is supported in the Hugging Face 🤗 Transformers library. To use the model, the Transformers library and 🤗 Datasets (for loading audio datasets) need to be installed.

The model can be used for short-form transcription (audio files < 30 seconds) using the pipeline class. If the language of the source audio is known, it can be passed explicitly; otherwise the model predicts the language automatically.
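A minimal sketch of short-form transcription with the pipeline class follows. It assumes `torch` and `transformers` are installed and that the checkpoint is available as `openai/whisper-large-v3`; the helper names and the audio file name in the usage note are hypothetical. The heavy imports are placed inside the function so the small keyword-building helper can be used on its own.

```python
from typing import Optional


def build_generate_kwargs(language: Optional[str] = None,
                          task: str = "transcribe") -> dict:
    """Generation options: omit the language to let the model detect it."""
    kwargs = {"task": task}
    if language is not None:
        kwargs["language"] = language
    return kwargs


def transcribe_short(audio_path: str, language: Optional[str] = None,
                     task: str = "transcribe") -> str:
    """Transcribe one audio file shorter than 30 seconds."""
    # Imported lazily: these packages are large and only needed for inference.
    import torch
    from transformers import pipeline

    use_gpu = torch.cuda.is_available()
    pipe = pipeline(
        "automatic-speech-recognition",
        model="openai/whisper-large-v3",
        torch_dtype=torch.float16 if use_gpu else torch.float32,
        device="cuda:0" if use_gpu else "cpu",
    )
    result = pipe(audio_path, generate_kwargs=build_generate_kwargs(language, task))
    return result["text"]
```

For example, `transcribe_short("sample.flac", language="english")` would skip language detection, while `transcribe_short("sample.flac")` leaves detection to the model.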

For long-form transcription (audio files > 30 seconds), two algorithms are available: chunked long-form and sequential long-form. The chunked algorithm is up to 9x faster than OpenAI's sequential implementation and should be used when transcription speed is the priority. The sequential algorithm provides more accurate transcriptions and should be used when accuracy is the primary concern or when transcribing batches of long audio files.
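The idea behind the chunked algorithm can be sketched as follows: the audio is cut into overlapping windows that are transcribed independently, and therefore in parallel, before the overlapping regions are merged. The function below only computes the window boundaries. The 5-second overlap on each side is an illustrative assumption; in the Transformers pipeline the window length is set with the `chunk_length_s` argument.

```python
def chunk_spans(duration_s: float, chunk_length_s: float = 30.0,
                stride_s: float = 5.0) -> list[tuple[float, float]]:
    """Return (start, end) times of overlapping chunks covering the audio.

    Neighbouring chunks overlap by 2 * stride_s seconds, so each chunk can
    discard stride_s seconds of less reliable context at either edge.
    """
    if chunk_length_s <= 2 * stride_s:
        raise ValueError("chunk_length_s must exceed twice the stride")
    if duration_s <= 0:
        return []

    step = chunk_length_s - 2 * stride_s
    spans = []
    start = 0.0
    while True:
        end = min(start + chunk_length_s, duration_s)
        spans.append((start, end))
        if end >= duration_s:
            break
        start += step
    return spans


print(chunk_spans(70.0))   # three overlapping 30-second windows
print(chunk_spans(25.0))   # short audio fits in a single window
```

The sequential algorithm instead moves a single window forward through the audio, using each transcription to decide where the next window starts, which is why it cannot be parallelized across one file in the same way.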

FAQ

- What is the Whisper model?

  Whisper is a state-of-the-art automatic speech recognition (ASR) and speech translation model developed by OpenAI. It is a Transformer-based encoder-decoder model; the original models were trained on 680k hours of labeled audio data.

- What are the different Whisper model configurations?

  Whisper models come in five different configurations: tiny, base, small, medium, and large. The large-v3 model, which is the focus of this report, has 1550 million parameters.

- How does the Whisper large-v3 model differ from previous versions?

  The large-v3 model has two minor architectural changes compared to the previous large models: it uses 128 Mel frequency bins instead of 80, and it includes a new language token for Cantonese.

- What is the performance of the Whisper large-v3 model?

  Whisper large-v3 reaches near state-of-the-art accuracy on speech recognition and translation tasks and is robust to accents, background noise, and technical language. However, it may suffer from hallucinations and uneven performance across languages due to the weakly supervised training approach.

- How can the Whisper model be fine-tuned?

  The Whisper model can be fine-tuned for specific languages and tasks using as little as 5 hours of labeled data, as described in the "Fine-Tune Whisper with 🤗 Transformers" blog post.

- Who are the primary users of the Whisper model?

  The primary intended users of the Whisper models are AI researchers studying robustness, generalization, capabilities, biases, and constraints of the current model. However, the model can also be useful as an ASR solution for developers, especially for English speech recognition.

- What are the limitations of the Whisper model?

  The Whisper model's limitations include hallucinations, uneven performance across languages, and a tendency to generate repetitive texts. It is also primarily trained and evaluated on ASR and speech translation into English, and its capabilities in other areas like voice activity detection or speaker diarization have not been robustly evaluated.

- How can the Whisper model be used in practice?

  The Whisper large-v3 model is supported in the Hugging Face 🤗 Transformers library and can be used for both short-form and long-form transcription. The chunked long-form algorithm is faster, while the sequential long-form algorithm provides more accurate transcriptions.
