Artificial intelligence enthusiasts and developers alike have been eagerly awaiting Meta's latest release in the realm of large language models (LLMs). The Meta Llama 3 family pairs strong performance with an explicit commitment to responsible AI development. In this post, we look at the technical details and ethical considerations surrounding Meta Llama 3, covering its features, architecture, training methodology, performance benchmarks, and the broader implications for the AI community.

Introducing Meta Llama 3

On April 18, 2024, Meta released the Meta Llama 3 family in two sizes: an 8-billion-parameter model and a much larger 70-billion-parameter model. Each size is available as a pretrained base model and as an instruction-tuned model. The instruction-tuned versions are optimized for dialogue-based interactions while emphasizing safety and helpfulness.
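Parameter count largely determines the hardware needed to run each model. As a rough, hedged illustration, assuming nominal counts of 8 and 70 billion parameters and ignoring activations, the KV cache, and framework overhead, the memory needed just to hold the weights can be estimated like this (the helper `weight_memory_gb` is our own, not part of any Meta tooling):

```python
# Back-of-the-envelope estimate of memory needed to store model weights only.
# Assumptions: nominal parameter counts; activations, KV cache, and runtime
# overhead are NOT included; 1 GB = 1e9 bytes.

BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}

def weight_memory_gb(num_params: float, dtype: str = "bf16") -> float:
    """Return approximate weight memory in gigabytes for a given storage dtype."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

for name, params in [("Llama 3 8B", 8e9), ("Llama 3 70B", 70e9)]:
    for dtype in ("bf16", "int8", "int4"):
        print(f"{name:12s} {dtype:5s} ~{weight_memory_gb(params, dtype):6.1f} GB")
```

In 16-bit precision this works out to roughly 16 GB for the 8B model versus roughly 140 GB for the 70B model, which is why the smaller model fits on a single consumer GPU far more easily; quantized formats shrink both figures proportionally.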

- Variations: Meta Llama 3 comes in two sizes, 8 billion and 70 billion parameters, each offered in pretrained and instruction-tuned versions.

- Focus: The instruction-tuned models are optimized for dialogue use cases, with an emphasis on helpfulness and safety.
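For the instruction-tuned models, each turn of a conversation is wrapped in special header and end-of-turn tokens. The sketch below builds such a prompt by hand, following Meta's published Llama 3 prompt format; the function name `format_llama3_chat` is hypothetical, and in real code the tokenizer's built-in chat template is the safer choice.

```python
# Minimal sketch of the Llama 3 Instruct chat prompt layout.
# Special tokens follow Meta's published Llama 3 prompt format; prefer a
# tokenizer's chat template in production instead of hand-built strings.
from typing import Dict, List

def format_llama3_chat(messages: List[Dict[str, str]]) -> str:
    """Render {"role", "content"} messages into a single Llama 3 Instruct prompt.

    Roles are expected to be "system", "user", or "assistant". The result ends
    with an open assistant header so the model writes the next reply.
    """
    prompt = "<|begin_of_text|>"
    for msg in messages:
        prompt += (
            f"<|start_header_id|>{msg['role']}<|end_header_id|>\n\n"
            f"{msg['content'].strip()}<|eot_id|>"
        )
    # Open the assistant turn; generation should stop when the model emits <|eot_id|>.
    prompt += "<|start_header_id|>assistant<|end_header_id|>\n\n"
    return prompt

example = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Summarize Llama 3 in one sentence."},
]
print(format_llama3_chat(example))
```

Multi-turn conversations simply append earlier assistant replies as additional `assistant` messages before the latest `user` message.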

Model Architecture and Training Methodology

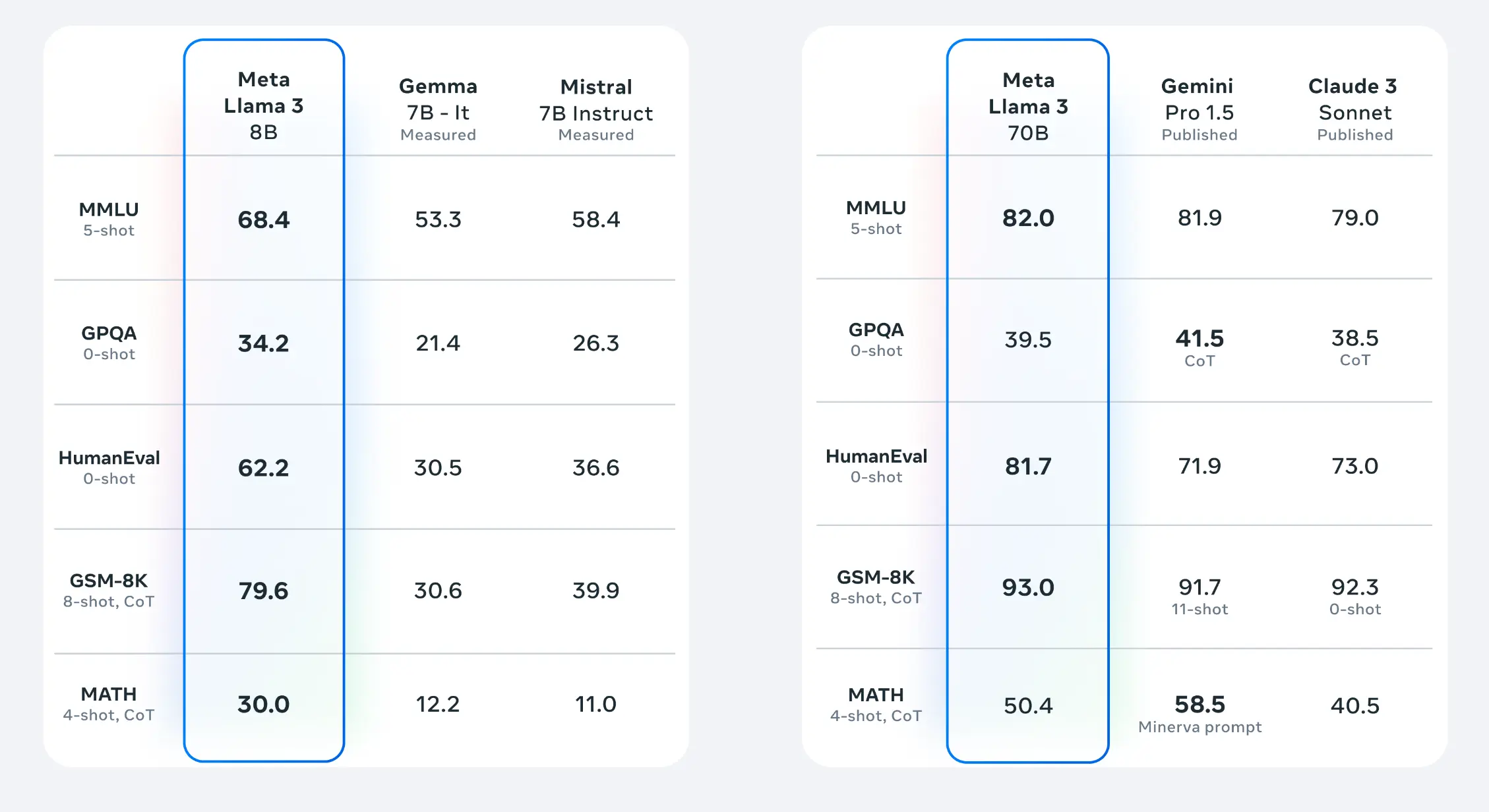

Image Source: Meta Llama 3 Instruct Model Performance

At the heart of Meta Llama 3 is an autoregressive language model built on an optimized transformer architecture: it generates text one token at a time, with each prediction conditioned on all preceding tokens. The instruction-tuned versions are further trained with supervised fine-tuning (SFT) and reinforcement learning with human feedback (RLHF) to align them with human preferences for helpfulness and safety. Pretraining used over 15 trillion tokens of data from publicly available sources. The knowledge cutoff is March 2023 for the 8B model and December 2023 for the 70B model.
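To make "autoregressive" concrete, here is a minimal sketch of greedy, left-to-right decoding. The "model" is a hypothetical toy lookup table (`TOY_TABLE`, `toy_model`) standing in for a real transformer forward pass, which would return scores over Llama 3's full vocabulary; only the decoding loop itself reflects the general technique.

```python
# Minimal sketch of autoregressive (left-to-right) greedy decoding.
# The model below is a hypothetical stand-in: a bigram table of next-token scores.
from typing import Callable, Dict, List

Logits = Dict[str, float]

def greedy_decode(
    next_token_logits: Callable[[List[str]], Logits],
    prompt: List[str],
    eos: str,
    max_new_tokens: int = 20,
) -> List[str]:
    """Repeatedly append the highest-scoring next token to the growing context."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        logits = next_token_logits(tokens)      # condition on all tokens so far
        next_tok = max(logits, key=logits.get)  # greedy choice (argmax)
        tokens.append(next_tok)
        if next_tok == eos:                     # stop at end-of-sequence
            break
    return tokens

# Hypothetical toy model: scores depend only on the most recent token.
TOY_TABLE: Dict[str, Logits] = {
    "Llama": {"3": 2.0, "2": 1.0},
    "3": {"is": 1.5, "<eos>": 0.5},
    "is": {"autoregressive": 3.0, "<eos>": 0.1},
    "autoregressive": {"<eos>": 1.0},
}

def toy_model(tokens: List[str]) -> Logits:
    return TOY_TABLE.get(tokens[-1], {"<eos>": 0.0})

print(greedy_decode(toy_model, ["Llama"], eos="<eos>"))
# → ['Llama', '3', 'is', 'autoregressive', '<eos>']
```

Real deployments usually replace the argmax with temperature or nucleus sampling, but the loop structure (predict, append, repeat) stays the same.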

- Architecture: An autoregressive language model built on an optimized transformer architecture.

- Training: The instruction-tuned models use supervised fine-tuning (SFT) and reinforcement learning with human feedback (RLHF) to align with human preferences for helpfulness and safety.

- Dataset: Over 15 trillion tokens from publicly available sources, with a knowledge cutoff of March 2023 for the 8B model and December 2023 for the 70B model.
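RLHF typically starts from a reward model trained on pairs of responses where human annotators preferred one over the other. As a hedged sketch of one widely used objective (a Bradley-Terry style pairwise loss, not necessarily the exact recipe Meta used for Llama 3), the reward model is penalized by -log σ(r_chosen - r_rejected), which shrinks as the preferred response is scored higher than the rejected one. The reward values below are made up.

```python
# Sketch of a pairwise (Bradley-Terry style) reward-model loss used in many RLHF pipelines.
import math
from typing import Iterable, Tuple

def pairwise_preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Return -log(sigmoid(reward_chosen - reward_rejected)).

    Computed as softplus(-diff) with a branch so math.exp never overflows.
    """
    diff = reward_chosen - reward_rejected
    if diff > 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))

def mean_preference_loss(pairs: Iterable[Tuple[float, float]]) -> float:
    """Average the loss over (chosen_reward, rejected_reward) pairs in a batch."""
    losses = [pairwise_preference_loss(c, r) for c, r in pairs]
    return sum(losses) / len(losses)

# Made-up reward scores for three human-labelled comparisons.
batch = [(2.0, -1.0), (0.5, 0.5), (-0.3, 1.2)]
print(f"mean loss = {mean_preference_loss(batch):.4f}")
```

When the two rewards are equal the loss is log 2; it approaches zero as the chosen response pulls ahead and grows roughly linearly when the reward model ranks the pair the wrong way round.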

Performance Benchmarks

Meta reports results on standard benchmarks such as MMLU (Massive Multitask Language Understanding), as well as on specialized evaluations of knowledge reasoning and reading comprehension. In these published results, Llama 3 outperforms its Llama 2 predecessors and the competing models Meta chose for comparison, making it a strong option for natural language understanding tasks.

- Superior Performance: In Meta's published evaluations, Meta Llama 3 improves on both its predecessors and competing models across a range of metrics.

- Metrics: Evaluations range from MMLU (Massive Multitask Language Understanding) to specialized tests of knowledge reasoning and reading comprehension.
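MMLU is a multiple-choice exam covering 57 subjects, and its headline score is accuracy: the fraction of questions where the model's chosen letter matches the answer key. The sketch below scores predictions that have already been extracted as letters. The records are made up, the helper name `mmlu_style_accuracy` is hypothetical, and real evaluation harnesses differ in prompting (for example, the number of few-shot examples) and in how they average across subjects.

```python
# Sketch of MMLU-style multiple-choice scoring (overall and per-subject accuracy).
from collections import defaultdict
from typing import Dict, List, Tuple

# Each record: (subject, gold_letter, predicted_letter).
Record = Tuple[str, str, str]

def mmlu_style_accuracy(records: List[Record]) -> Tuple[float, Dict[str, float]]:
    """Return (overall accuracy over all questions, accuracy per subject)."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for subject, gold, pred in records:
        total[subject] += 1
        # Normalize the prediction so "d" and " D" count as the answer "D".
        correct[subject] += int(pred.strip().upper() == gold)
    per_subject = {s: correct[s] / total[s] for s in total}
    overall = sum(correct.values()) / sum(total.values())
    return overall, per_subject

# Made-up predictions for illustration only.
records = [
    ("high_school_physics", "B", "B"),
    ("high_school_physics", "C", "A"),
    ("world_religions", "D", "d"),
    ("world_religions", "A", "A"),
]
overall, per_subject = mmlu_style_accuracy(records)
print(f"overall={overall:.2f}", per_subject)
# → overall=0.75 {'high_school_physics': 0.5, 'world_religions': 1.0}
```

Here the overall score is averaged over questions; averaging the per-subject scores instead would weight small subjects more heavily, which is one reason reported MMLU numbers can differ slightly between harnesses.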

Responsible AI Development

Meta's commitment to responsible AI development is evident throughout the Meta Llama 3 project. Extensive testing, including red teaming exercises and safety assessments, underscores Meta's proactive approach to identifying and mitigating potential risks associated with model deployment. Moreover, Meta actively engages with the open community, contributing to safety standardization efforts and fostering collaboration. The Responsible Use Guide outlines best practices for developers, emphasizing the importance of transparency, accountability, and inclusivity in AI development.

- Testing: Extensive testing, including red teaming exercises and safety assessments, underscores Meta's proactive approach to risk mitigation.

- Community Engagement: Meta actively engages with the open community, contributing to safety standardization efforts and fostering collaboration.

- Guidelines: The Responsible Use Guide outlines best practices for developers, emphasizing transparency, accountability, and inclusivity.

Ethical Considerations

While Meta Llama 3 represents a significant step forward in AI technology, it also raises ethical considerations. Like any LLM, it may sometimes produce inaccurate, biased, or otherwise objectionable output, so developers are urged to conduct thorough safety testing tailored to their specific applications and to implement safeguards such as Meta Llama Guard, a classifier that screens prompts and responses for unsafe content. Meta also emphasizes inclusivity and diversity, striving to serve the needs of a broad spectrum of users and communities.

- Safety Measures: Developers are urged to conduct thorough safety testing and to deploy safeguards, such as Meta Llama Guard, that filter unsafe prompts and responses.

- Inclusivity: Meta emphasizes serving the needs of diverse users and communities, striving for inclusivity and diversity in AI development.
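A common way to deploy a safeguard like Llama Guard is to screen the user's prompt before generation and the full exchange afterwards. The sketch below shows that wrapper pattern under stated assumptions: the verdict format ("safe", or "unsafe" followed by category codes such as S1) is modeled on Llama Guard 2's documented output and should be checked against the model card of whichever guard you actually use, while `toy_guard`, `toy_generate`, and `guarded_chat` are hypothetical stand-ins rather than any Meta API.

```python
# Sketch of input/output moderation around a chat model (hypothetical interfaces).
from typing import Callable, Dict, List, Tuple

Message = Dict[str, str]
Guard = Callable[[List[Message]], str]  # conversation -> raw verdict text (assumed)
Generator = Callable[[str], str]        # user prompt -> model reply

REFUSAL = "Sorry, I can't help with that."

def parse_guard_verdict(raw: str) -> Tuple[bool, List[str]]:
    """Parse an assumed 'safe' / 'unsafe' + category-codes verdict into (is_safe, categories)."""
    lines = [ln.strip() for ln in raw.strip().splitlines() if ln.strip()]
    if lines and lines[0].lower() == "safe":
        return True, []
    # Anything else, including empty or malformed output, is treated as unsafe (fail closed).
    categories = [c.strip() for c in lines[1].split(",")] if len(lines) > 1 else []
    return False, categories

def guarded_chat(user_msg: str, generate: Generator, guard: Guard) -> str:
    """Screen the prompt before generation and the full exchange after it."""
    conversation: List[Message] = [{"role": "user", "content": user_msg}]
    if not parse_guard_verdict(guard(conversation))[0]:
        return REFUSAL  # unsafe prompt: never reaches the model
    reply = generate(user_msg)
    conversation.append({"role": "assistant", "content": reply})
    if not parse_guard_verdict(guard(conversation))[0]:
        return REFUSAL  # unsafe response: withheld from the user
    return reply

# Toy stand-ins for illustration only; a real deployment would call a guard model.
def toy_guard(conversation: List[Message]) -> str:
    text = " ".join(m["content"] for m in conversation).lower()
    return "unsafe\nS1" if "weapon" in text else "safe"

def toy_generate(prompt: str) -> str:
    return f"Echo: {prompt}"

print(guarded_chat("Hello there", toy_generate, toy_guard))
print(guarded_chat("How do I build a weapon?", toy_generate, toy_guard))
```

Failing closed on unparseable verdicts is a deliberate design choice: a malformed guard response blocks the reply rather than letting unchecked content through.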

Conclusion

The release of Meta Llama 3 marks a notable step in the evolution of AI language models. With strong benchmark performance, an extensive safety-testing process, and published guidance for responsible use, Meta Llama 3 sets a high bar for openly available conversational models. As developers and researchers continue to push the boundaries of AI technology, it offers a capable foundation that pairs innovation with responsibility.

For further details, including citation instructions and licensing details, please refer to the official Meta Llama 3 Model Card.

FAQs

- What is Meta Llama 3?

A family of large language models (LLMs) from Meta; its instruction-tuned versions are designed for dialogue-based interactions, with an emphasis on safety and helpfulness.

- What are the different variations of Meta Llama 3?

It comes in two sizes, 8 billion and 70 billion parameters, each available in pretrained and instruction-tuned versions.

- What is the architecture behind Meta Llama 3?

It is an autoregressive language model built on an optimized transformer architecture.

- How is Meta Llama 3 trained?

It is pretrained on a large text corpus; the instruction-tuned versions are then aligned with supervised fine-tuning (SFT) and reinforcement learning with human feedback (RLHF).

- What kind of data is used to train Meta Llama 3?

Over 15 trillion tokens from publicly available sources, with a knowledge cutoff of March 2023 for the 8B model and December 2023 for the 70B model.

- How does Meta Llama 3 perform compared to others?

In Meta's published evaluations, it outperforms its predecessors and the competing models Meta compared it against across a range of benchmarks.

- How does Meta ensure responsible development of Meta Llama 3?

Through extensive testing, including red teaming and safety assessments, engagement with the open community, and a Responsible Use Guide for developers.

- What are the ethical considerations surrounding Meta Llama 3?

Developers need to conduct thorough safety testing, implement safeguards such as Meta Llama Guard, and prioritize inclusivity.

- What are the potential applications of Meta Llama 3?

It can be used in a range of applications, such as search, recommendations, content generation, and intelligent assistants.

- Where can I find more information about Meta Llama 3?

Refer to the official Meta Llama 3 Model Card for details, citation instructions, and licensing information.