Architecture

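MiniCPM-Llama3-V 2.5 is a multimodal large language model (MLLM) from OpenBMB's MiniCPM-V series. It pairs a SigLip-400M vision encoder with the Llama3-8B-Instruct language model, for a total of 8B parameters, so it takes images and text as input and produces text. It is a general-purpose vision-language model, not an architecture built specifically for code. Its code-related abilities come from its Llama 3 language backbone and from its ability to read text in images.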
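The FAQ below describes the model as a SigLip-400M vision encoder combined with Llama3-8B-Instruct. The toy sketch that follows illustrates the general pattern vision-language models of this kind typically use: image features are linearly projected into the language model's embedding space and placed in front of the text token embeddings as one input sequence. All dimensions, weights, and function names are hypothetical. MiniCPM-V's actual connector and its image-slicing scheme are not reproduced here.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # one row per output dimension


def project(features: List[Vector], weight: Matrix) -> List[Vector]:
    """Linearly map each vision feature (dim d_v) to the LLM embedding size (dim d_t)."""
    return [
        [sum(w * x for w, x in zip(row, feat)) for row in weight]
        for feat in features
    ]


def build_llm_input(
    image_features: List[Vector],   # hypothetical vision-encoder output, one vector per token
    projector: Matrix,              # hypothetical learned weights, shape d_t x d_v
    text_embeddings: List[Vector],  # one vector per text token, dim d_t
) -> List[Vector]:
    """Prepend projected visual tokens to the text tokens to form one sequence."""
    visual_tokens = project(image_features, projector)
    return visual_tokens + text_embeddings


# Toy sizes: 3 visual features of dim 4 projected to dim 2, plus 2 text tokens of dim 2.
image_features = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 1.0]]
projector = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]]
text_embeddings = [[0.5, 0.5], [0.25, 0.75]]

sequence = build_llm_input(image_features, projector, text_embeddings)
print(len(sequence), len(sequence[0]))  # 5 2
```

The language model then processes this combined sequence like ordinary text embeddings, which is why the output is always text.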

Training Data

As a vision-language model, MiniCPM-Llama3-V 2.5 is trained on image-text data so that it can connect visual content with language, and it is then aligned with the RLAIF-V method described below. Its language backbone, Llama3-8B-Instruct, was pretrained by Meta on a large text corpus that includes code in many programming languages, which is the source of the model's general programming knowledge.

Capabilities

MiniCPM-Llama3-V 2.5 answers questions and follows instructions about images and text. Its key capabilities include:

- Visual Understanding: The model can describe images, answer questions about them, and reason over their content, including photos, forms, and screenshots.

- OCR and Document Parsing: The model can read text in images of any aspect ratio, extract the full text of a page, and convert tables in images into Markdown.

- Code-Related Tasks: Through its Llama3-8B-Instruct backbone, the model can write, complete, and explain code from a text prompt, and it can transcribe or explain code that appears in a screenshot. Because it is a general-purpose model, its code output should be reviewed like any other generated code.
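Whatever the task, the model replies with free text, so a tool that uses it to generate or complete code usually has to extract the code from the reply. Below is a minimal sketch, assuming the reply wraps code in Markdown-style triple-backtick fences (the model is not guaranteed to do so). `extract_code_blocks` is a hypothetical helper, not part of any MiniCPM API.

```python
import re
from typing import List, Optional, Tuple

FENCE = "`" * 3  # built this way so the example can itself sit inside a fenced block

# Matches: FENCE, optional language tag, newline, body (non-greedy), FENCE
_BLOCK_RE = re.compile(
    re.escape(FENCE) + r"([\w+-]*)[ \t]*\n(.*?)" + re.escape(FENCE),
    re.DOTALL,
)


def extract_code_blocks(reply: str) -> List[Tuple[Optional[str], str]]:
    """Return (language or None, code) for every fenced block in a model reply."""
    blocks = []
    for match in _BLOCK_RE.finditer(reply):
        lang = match.group(1) or None
        code = match.group(2).rstrip("\n")
        blocks.append((lang, code))
    return blocks


reply = (
    "Here is the function:\n"
    f"{FENCE}python\ndef add(a, b):\n    return a + b\n{FENCE}\n"
    "Hope that helps."
)
print(extract_code_blocks(reply))
```

If the reply contains no fences, the helper returns an empty list, and the caller can fall back to treating the whole reply as code or asking the model again.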

Use Cases

- Document Digitization: MiniCPM-Llama3-V 2.5 can extract text from scanned pages, photos, and screenshots, and convert tables in images into Markdown.

- Visual Question Answering: The model can answer questions about the content of an image, such as a photo, a form, or a user-interface screenshot.

- Developer Assistance: The model can generate or complete code from text prompts, and transcribe or explain code captured in screenshots. This is useful when source code is only available as an image, for example in a tutorial video frame or a shared screenshot.

How to Use

- Hugging Face: OpenBMB publishes the model weights on Hugging Face as openbmb/MiniCPM-Llama3-V-2_5, and they can be loaded with the transformers library. The repository ships custom model code, so loading requires trust_remote_code=True.

- Fine-Tuning: For a specific task or domain, fine-tuning the model on relevant data can improve its accuracy. Fine-tuning updates the model's parameters on a task-specific dataset so that the model adapts to that task's inputs and expected outputs.

- Integration: MiniCPM-Llama3-V 2.5 can be built into applications that need to read or reason about images, such as document-processing pipelines, screenshot-to-text tools, or developer tools that explain code captured in images. For local or offline use, it can also run on CPU through llama.cpp and ollama.
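Below is a minimal sketch of loading the model with the Hugging Face `transformers` library, adapted from the usage shown on the model card at the time of writing. It assumes a CUDA GPU with `torch`, `transformers`, and `Pillow` installed, and it relies on the repository's custom code (`trust_remote_code=True`). `model.chat` and its `msgs` format come from that remote code, not from `transformers` itself, so they may change between revisions. The image path and question in the usage comment are placeholders, and only `build_msgs` runs without downloading the model.

```python
from typing import Dict, List

MODEL_ID = "openbmb/MiniCPM-Llama3-V-2_5"


def build_msgs(question: str) -> List[Dict[str, str]]:
    """Single-turn conversation in the role/content format shown on the model card."""
    return [{"role": "user", "content": question}]


def load_model(model_id: str = MODEL_ID):
    """Download (on first use) and load the fp16 weights and tokenizer onto a CUDA GPU."""
    # Heavy imports live here so build_msgs works without these packages installed.
    import torch
    from transformers import AutoModel, AutoTokenizer

    model = AutoModel.from_pretrained(
        model_id, trust_remote_code=True, torch_dtype=torch.float16
    )
    model = model.to(device="cuda").eval()
    tokenizer = AutoTokenizer.from_pretrained(model_id, trust_remote_code=True)
    return model, tokenizer


def ask(model, tokenizer, image_path: str, question: str) -> str:
    """Send one image and one question; `chat` is defined by the repo's remote code."""
    from PIL import Image

    image = Image.open(image_path).convert("RGB")
    return model.chat(
        image=image,
        msgs=build_msgs(question),
        tokenizer=tokenizer,
        sampling=True,
        temperature=0.7,
    )


# Usage (requires a GPU and the downloaded weights):
# model, tokenizer = load_model()
# print(ask(model, tokenizer, "screenshot.png", "Transcribe the code in this image."))
```

Keeping the imports inside the functions is a design choice for this sketch: a larger application can import the module cheaply and only pay the model-loading cost when it actually needs the model.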
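The fine-tuning bullet above does not name a method. One widely used parameter-efficient option is LoRA, which freezes a weight matrix W and trains a low-rank update ΔW = (alpha / r) · B · A instead. Whether and how it applies to this model depends on the official fine-tuning scripts, so treat the sketch below as a generic illustration. It compares trainable parameter counts for a single d × k matrix. The 4096 × 4096 size and rank 8 are illustrative assumptions, not values taken from this model's configuration.

```python
def full_finetune_params(d: int, k: int) -> int:
    """Trainable parameters when every entry of a d x k weight matrix is updated."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for a rank-r LoRA update: B is d x r, A is r x k."""
    return r * (d + k)


def lora_delta(B, A, alpha: float, r: int):
    """Scaled low-rank update (alpha / r) * B @ A, computed with nested lists."""
    scale = alpha / r
    return [
        [scale * sum(B[i][t] * A[t][j] for t in range(r)) for j in range(len(A[0]))]
        for i in range(len(B))
    ]


d, k, r = 4096, 4096, 8
print(full_finetune_params(d, k))  # 16777216
print(lora_params(d, k, r))        # 65536
```

Here the low-rank update trains 65,536 parameters instead of 16,777,216, about 0.4% of the full matrix, which is why this style of fine-tuning fits on much smaller hardware.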

Key Improvements

The key improvements in MiniCPM-Llama3-V 2.5 include:

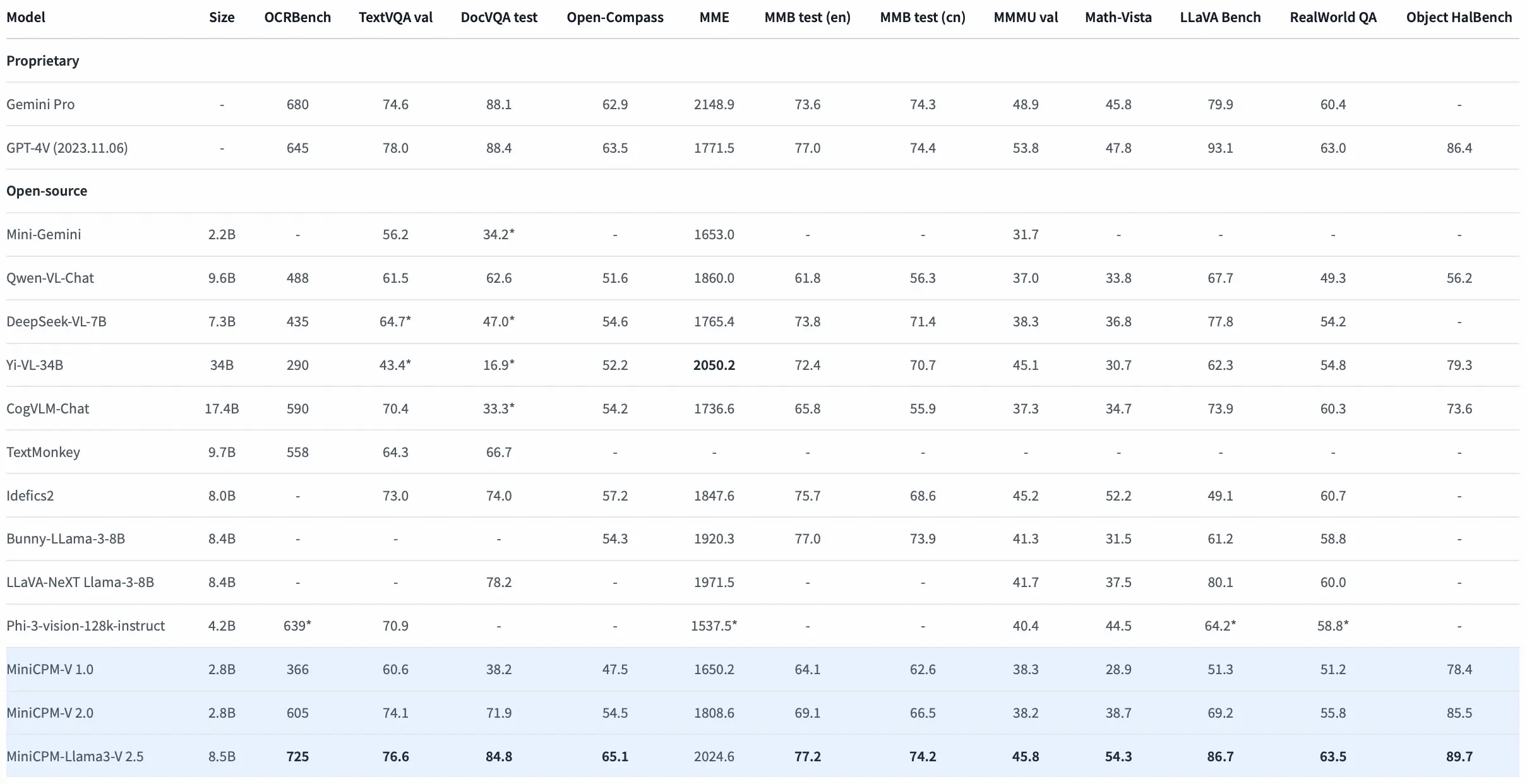

- Leading Performance: With only 8B parameters, MiniCPM-Llama3-V 2.5 achieves an average score of 65.1 on OpenCompass, a comprehensive evaluation over 11 popular benchmarks. It surpasses widely used proprietary models such as GPT-4V-1106, Gemini Pro, Claude 3, and Qwen-VL-Max, and outperforms other Llama 3-based MLLMs.

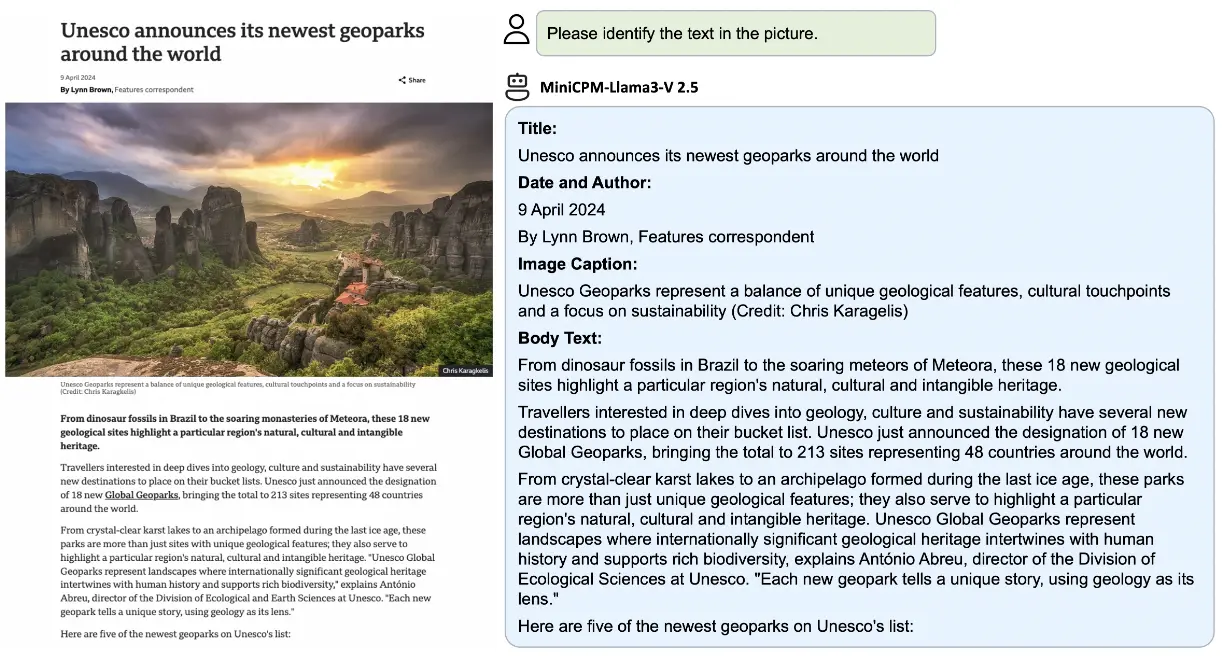

- Strong OCR Capabilities: The model can process images with any aspect ratio and up to 1.8 million pixels (e.g., 1344x1344), achieving a score of 700+ on OCRBench, surpassing proprietary models such as GPT-4o, GPT-4V-0409, Qwen-VL-Max, and Gemini Pro.

- Trustworthy Behavior: Leveraging the latest RLAIF-V method, MiniCPM-Llama3-V 2.5 exhibits more trustworthy behavior, achieving a hallucination rate of 10.3% on Object HalBench, lower than GPT-4V-1106 (13.6%), and achieving the best-level performance within the open-source community.

- Efficient Deployment: The model supports efficient CPU inference through llama.cpp and ollama, so it can run on local machines and edge devices without a dedicated GPU.

- Multimodal Interaction: MiniCPM-Llama3-V 2.5 has enhanced full-text OCR extraction, table-to-markdown conversion, and other high-utility capabilities, and has further strengthened its instruction-following and complex reasoning abilities, enhancing multimodal interaction experiences.

- Quantized Version: A quantized version of the model (MiniCPM-Llama3-V-2_5-int4) is available for lower GPU memory usage, making it more accessible for deployment on devices with limited resources.
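The OCR bullet above quotes a limit of about 1.8 million pixels, matching its 1344 × 1344 example (1,806,336 pixels). If you want to keep very large inputs within that budget on the client side, a check might look like the sketch below. It assumes 1344 × 1344 is the budget to target. The model's own preprocessing is expected to handle resizing internally, so this hypothetical `fit_to_pixel_budget` helper only computes a target size that preserves the aspect ratio; the actual resize would be done with an image library.

```python
import math
from typing import Tuple

MAX_PIXELS = 1344 * 1344  # assumption: the ~1.8M-pixel figure quoted in the OCR bullet


def fit_to_pixel_budget(width: int, height: int, max_pixels: int = MAX_PIXELS) -> Tuple[int, int]:
    """Return a (width, height) with the same aspect ratio and at most max_pixels pixels."""
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    if width * height <= max_pixels:
        return width, height  # already within budget: keep the original size
    # Scaling both sides by s scales the area by s**2, so s = sqrt(budget / area).
    scale = math.sqrt(max_pixels / (width * height))
    new_w = max(1, math.floor(width * scale))
    new_h = max(1, math.floor(height * scale))
    # Guard against floating-point rounding pushing the product over the budget.
    while new_w * new_h > max_pixels:
        if new_w >= new_h:
            new_w -= 1
        else:
            new_h -= 1
    return new_w, new_h


print(fit_to_pixel_budget(1344, 1344))  # (1344, 1344)
print(fit_to_pixel_budget(4000, 3000))  # (1551, 1163)
```

Rounding each side down, rather than to the nearest integer, keeps the result under the budget in almost every case; the loop handles the rare remainder.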
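Two figures in the list above can be put in perspective with simple arithmetic. The Object HalBench hallucination rates imply a relative reduction of roughly a quarter compared with GPT-4V-1106. A back-of-the-envelope estimate of int4 weight storage also shows why the quantized model can fit in the roughly 8 GB of GPU memory cited in the FAQ below. The memory line is a rough assumption: it counts weights only, treats all of the roughly 8 billion parameters as 4-bit, and ignores activations, the KV cache, and any components kept at higher precision.

```latex
\begin{aligned}
\text{relative reduction} &= \frac{13.6\% - 10.3\%}{13.6\%} = \frac{3.3}{13.6} \approx 0.243 \approx 24\% \\[4pt]
\text{int4 weight storage} &\approx 8 \times 10^{9}\ \text{parameters} \times \tfrac{1}{2}\ \text{byte/parameter} = 4 \times 10^{9}\ \text{bytes} \approx 4\ \text{GB}
\end{aligned}
```

On this estimate, roughly half of an 8 GB budget would remain for runtime memory beyond the weights.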

Together, these improvements make MiniCPM-Llama3-V 2.5 a capable and versatile model for applications such as OCR, document understanding, and multimodal interaction.

FAQs

- What is MiniCPM-Llama3-V 2.5?

MiniCPM-Llama3-V 2.5 is the latest model in the MiniCPM-V series, built on SigLip-400M and Llama3-8B-Instruct with a total of 8B parameters. It exhibits a significant performance improvement over MiniCPM-V 2.0, featuring leading performance, strong OCR capabilities, and trustworthy behavior.

- How does MiniCPM-Llama3-V 2.5 compare to MiniCPM-V 2.0 in terms of performance and features?

MiniCPM-Llama3-V 2.5 has achieved a significant performance improvement over MiniCPM-V 2.0, surpassing widely used proprietary models like GPT-4V-1106, Gemini Pro, Claude 3, and Qwen-VL-Max. It also exhibits enhanced OCR capabilities and trustworthy behavior.

- What are the key improvements in MiniCPM-Llama3-V 2.5 over its predecessor?

The key improvements include leading performance, strong OCR capabilities, and trustworthy behavior. MiniCPM-Llama3-V 2.5 has achieved an average score of 65.1 on OpenCompass, surpassing other Llama 3-based MLLMs and proprietary models.

- What benchmarks has MiniCPM-Llama3-V 2.5 excelled in, and how does it compare to other popular models like GPT-4V-1106?

MiniCPM-Llama3-V 2.5 achieves an average score of 65.1 on OpenCompass, surpassing GPT-4V-1106, Gemini Pro, Claude 3, and Qwen-VL-Max and outperforming other Llama 3-based MLLMs. It also scores 700+ on OCRBench and has a 10.3% hallucination rate on Object HalBench, compared with 13.6% for GPT-4V-1106.

- What are the notable features of MiniCPM-Llama3-V 2.5, particularly in terms of performance, OCR capabilities, and trustworthy behavior?

The notable features include leading performance, strong OCR capabilities, and trustworthy behavior. MiniCPM-Llama3-V 2.5 can process images with any aspect ratio and up to 1.8 million pixels, achieving a score of 700+ on OCRBench. It also exhibits a hallucination rate of 10.3% on Object HalBench, lower than GPT-4V-1106 (13.6%).

- How does MiniCPM-Llama3-V 2.5 leverage the RLAIF-V method to exhibit more trustworthy behavior?

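RLAIF-V (reinforcement learning from AI feedback) is the newest technique in the RLHF-V series. Instead of relying on human annotators, it aligns the model using preference feedback generated by open-source AI models, with the goal of reducing hallucination. With this alignment, MiniCPM-Llama3-V 2.5 reaches a 10.3% hallucination rate on Object HalBench.

- What is the significance of the int4 quantized version of MiniCPM-Llama3-V 2.5 for lower GPU memory usage?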

The int4 quantized version (MiniCPM-Llama3-V-2_5-int4) stores the model weights in 4-bit precision, which brings GPU memory usage down to about 8 GB. This makes the model practical on consumer GPUs and other devices with limited memory.

- What licensing agreements and restrictions apply to the usage of MiniCPM-Llama3-V 2.5's parameters for academic and commercial purposes?

The usage of MiniCPM-Llama3-V 2.5's parameters is subject to the General Model License Agreement, which includes restrictions on commercial use. Academic research is fully open, but commercial use requires written authorization from cpm@modelbest.cn.

- How does MiniCPM-Llama3-V 2.5 enhance multimodal interaction experiences, and what are its multilingual capabilities?

MiniCPM-Llama3-V 2.5 enhances multimodal interaction by supporting high-utility tasks such as full-text OCR extraction and table-to-markdown conversion, along with stronger instruction following and complex reasoning. Building on the multilingual abilities of Llama 3, it extends its bilingual (Chinese-English) multimodal capabilities to over 30 languages.

- How can developers access and deploy MiniCPM-Llama3-V 2.5, and what are the key considerations for fine-tuning and integration into applications?

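Developers can download the weights from Hugging Face (openbmb/MiniCPM-Llama3-V-2_5) and load them with the transformers library, use the int4 version on GPUs with about 8 GB of memory, or run the model on CPU through llama.cpp and ollama. When fine-tuning or integrating the model, match the deployment option to the available hardware and evaluate the model's outputs on your own data, since benchmark scores such as those on OpenCompass and OCRBench do not guarantee performance in a specific domain.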

Conclusion

In conclusion, MiniCPM-Llama3-V 2.5, released by OpenBMB through Hugging Face, is a compact multimodal model that offers strong OCR, document understanding, and visual reasoning at 8B parameters. Its deployment options range from standard GPU inference to int4 quantization and CPU inference. For developers, it can also assist with code-related work, such as generating code from prompts or transcribing and explaining code captured in screenshots, although it is a general-purpose vision-language model rather than a dedicated code model.